Variant Calling Pipeline: FastQ to Annotated SNPs in Hours

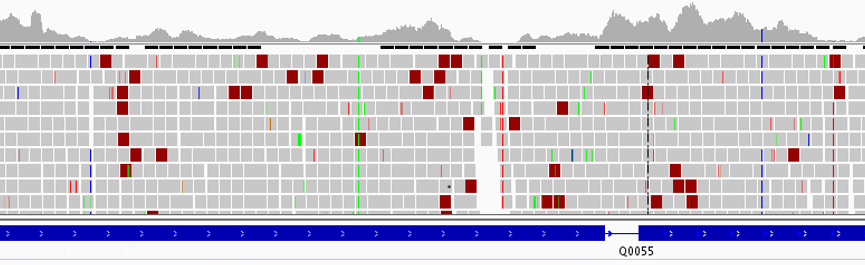

Update: This pipeline is now deprecated. See the updated version of the variant calling pipeline using GATK4.

Identifying genomic variants, such as single nucleotide polymorphisms (SNPs) and DNA insertions and deletions (indels), can play an important role in scientific discovery. To this end, a pipeline has been developed to allow…